Let’s get real about GraphQL performance: you got p99 problems but your p50 ain’t one

Don't know your GraphQL API's p99 response time? That's why we built GraphQL analytics into Stellate Analytics! Sign up (it’s free for 14 days!) and start learning about your GraphQL API’s performance today.

We live in a world that expects speed. All the way back in 2010, Amazon saw that every additional 100 milliseconds of load time cost them 1% in sales. Fast forward to 2017, when Akamai found that the same 100-millisecond delay cost 7% in conversion rates. Yikes! And if you like trampolines, check out this 2018 stat from Google: slower page loads increase the bounce rate by anywhere from 32% up to 123%, depending on how much slower the page gets!

If you want to know if your GraphQL API is fast, stop looking at your p50 response times. Yes, seriously, just stop looking at it and look at your p99 response times instead. Why?
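If you have never computed these percentiles from raw data yourself, here is a minimal sketch using the nearest-rank method. The `percentile` helper and the sample latencies are hypothetical, and your monitoring tool may use interpolation or streaming estimates instead, so its numbers can differ slightly from this approach.

```python
import math


def percentile(samples: list[float], p: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least p% of samples <= it."""
    if not samples:
        raise ValueError("need at least one sample")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    # 1-based rank; multiplying before dividing keeps whole-number ranks exact.
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[rank - 1]


# Hypothetical response times (ms) for 100 GraphQL requests: 90 fast, 10 slow.
latencies_ms = [40.0] * 90 + [900.0] * 10

print(f"p50 = {percentile(latencies_ms, 50)} ms, p99 = {percentile(latencies_ms, 99)} ms")
```

In this made-up sample the p50 looks great while one in ten requests is more than 20 times slower, which is exactly the gap a p50-only dashboard hides.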

Across the billions of GraphQL requests that the Stellate Edge Cache has seen in our network, we’ve noticed one peculiar fact: for every page view, our average customer’s site sends between 5 and 10 GraphQL requests. There are outliers on both sides (Relay users, for example, typically send only one GraphQL request per page view), but this range is usually in the right ballpark.

That, in turn, means that your p50 GraphQL response time is completely irrelevant. A page is only as fast as its slowest request, so more than 96% of your page views will have an overall loading time worse than your p50 response time. If you were feeling proud about your super fast p50, shift your attention to the metric that matters: your p99 response times.

Wait, what? How can 96% of users experience a worse response time than p50?

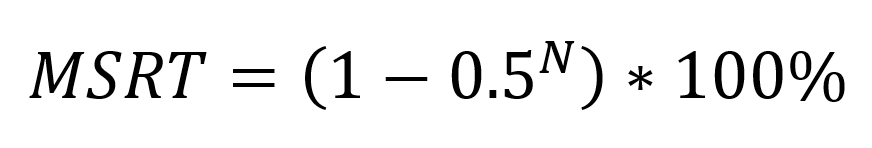

Back in 2014, Gil Tene showed a similar connection between median (p50) server response times and page load times. The formula for the share of page views that include at least one request slower than the median is:

$$\text{\% of page views slower than the MSRT} = \left(1 - 0.5^{N}\right) \times 100\%$$

Where MSRT is the ‘Median Server Response Time’ and N is the number of resource requests per page view.
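If you would rather check the formula empirically than take it on faith, the simulation below generates page views of N requests each and counts how many have a slowest request above the overall p50. It is a sketch under two stated assumptions: response times are independent and follow a hypothetical log-normal distribution, and a page finishes loading only when its slowest request returns. The function name is illustrative.

```python
import random
import statistics


def simulate_share_slower_than_p50(requests_per_page: int, page_views: int, seed: int = 42) -> float:
    """Estimate the share of page views whose slowest request exceeds the overall p50."""
    rng = random.Random(seed)
    # Hypothetical latency model: log-normal response times in milliseconds.
    # The exact shape is an assumption; what matters is that requests are independent.
    pages = [
        [rng.lognormvariate(4.0, 0.5) for _ in range(requests_per_page)]
        for _ in range(page_views)
    ]
    # p50 across every individual request in every page view.
    p50 = statistics.median(latency for page in pages for latency in page)
    # Assume the page has finished loading only once its slowest request returns.
    slower = sum(1 for page in pages if max(page) > p50)
    return slower / page_views


for n in (5, 10):
    share = simulate_share_slower_than_p50(n, page_views=20_000)
    print(f"{n} requests per page view: {share:.1%} slower than p50 (formula: {1 - 0.5 ** n:.1%})")
```

The simulated shares land close to the closed-form values; if your requests are correlated (for example, all slowed by the same overloaded backend), the real-world numbers will deviate from the formula.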

What does that mean for my GraphQL API’s response time?

As we’ve said above, across our network, websites that use GraphQL APIs usually send 5-10 GraphQL requests per page view. If you enter those numbers into the formula above, you will see that:

With 5 requests per page view, 96.875% of page views will experience an overall loading time worse than the p50 response time ((1 – 0.5 ^ 5) * 100%)

With 10 requests per page view, 99.9% of page views will experience an overall loading time worse than the p50 response time ((1 – 0.5 ^ 10) * 100%)
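The formula translates directly into a few lines of code. This sketch (the `share_slower_than` name is illustrative) takes the percentile as a fraction and assumes requests are independent, then prints how fast the p50 number climbs as a page sends more requests.

```python
def share_slower_than(percentile: float, requests_per_page: int) -> float:
    """Chance that at least one of N independent requests exceeds the given percentile.

    `percentile` is a fraction: 0.5 for p50, 0.99 for p99.
    """
    if not 0 < percentile < 1:
        raise ValueError("percentile must be a fraction between 0 and 1")
    if requests_per_page < 1:
        raise ValueError("need at least one request per page view")
    # A page view stays under the percentile only if every one of its requests does.
    return 1 - percentile ** requests_per_page


for n in range(1, 11):
    print(f"{n:>2} requests per page view: {share_slower_than(0.5, n):.3%} slower than p50")
```

A single request per page view (the Relay case) gives the intuitive 50%; by 5 requests it is already 96.875%.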

See how using p50 can be misleading?

Here's another crazy stat: even at p95, which feels like "Oh yeah, only the outliers are slower", 40% of page views will have an overall loading time slower than the p95 response time with 10 requests per page view! (Warning: we may run out of exclamation marks!)

Only at p99 do the numbers start to look like actual outliers:

With 5 requests per page view, just under 5% of page views will be slower than the p99 metric. ((1 – 0.99 ^ 5) * 100%)

With 10 requests per page view, just under 10% of page views will be slower than the p99 metric. ((1 – 0.99 ^ 10) * 100%)
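To see the p95 and p99 numbers side by side, the sketch below sweeps a few per-request percentiles for 5 and 10 requests per page view, again assuming independent requests. The percentile list is an arbitrary illustrative choice.

```python
def share_slower_than(percentile: float, requests_per_page: int) -> float:
    # Chance that at least one of N independent requests lands above the percentile.
    return 1 - percentile ** requests_per_page


percentiles = {"p50": 0.50, "p90": 0.90, "p95": 0.95, "p99": 0.99, "p99.9": 0.999}

for n in (5, 10):
    row = ", ".join(
        f"{name}: {share_slower_than(q, n):.1%}" for name, q in percentiles.items()
    )
    print(f"{n} requests per page view -> {row}")
```

With 10 requests per page view, p95 comes out at about 40.1% and p99 at about 9.6%; the share only drops just below 1% at a per-request p99.9.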

The takeaway? Focus on improving your p99 GraphQL response time. (See? No more exclamation marks.)
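If you want to turn that takeaway into a concrete target, the same formula can be inverted: pick the share of page views you want fully under a threshold, and solve for the per-request percentile that threshold has to match. This is a sketch assuming independent requests; `required_percentile` is a hypothetical helper, not part of any Stellate API.

```python
def required_percentile(target_fast_share: float, requests_per_page: int) -> float:
    """Per-request percentile q such that q ** N equals the target share of fast page views."""
    if not 0 < target_fast_share < 1:
        raise ValueError("target_fast_share must be a fraction between 0 and 1")
    if requests_per_page < 1:
        raise ValueError("need at least one request per page view")
    # Invert share_fast = q ** N  =>  q = share_fast ** (1 / N).
    return target_fast_share ** (1 / requests_per_page)


for n in (5, 10):
    q = required_percentile(0.99, n)
    print(f"{n} requests per page view: to keep 99% of page views fast, watch p{q * 100:.2f}")
```

For 10 requests per page view, keeping 99% of page views under a threshold means that threshold has to sit at roughly the per-request p99.9.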